Introduction

In cloud management, uninterrupted service is paramount, yet problems can still disrupt it. Recently, a customer ran into a notable issue: the VMware Cloud Director (VCD) service crashing when Container Service Extension (CSE) communicates with VCD. This article covers the symptoms, the cause, and, most importantly, a workaround for this issue.

Note that the workaround described here is not an official VMware recommendation; apply it at your own discretion. We expect VMware to publish an official KB article addressing this issue soon. The product versions discussed in this article are VCD 10.4.2 and CSE 4.1.0.

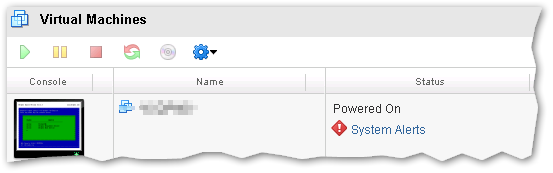

Symptoms:

- The VCD service crashes on VCD cells when traffic from CSE servers is permitted.

- The number of jobs with the ‘BEHAVIOR_INVOCATION’ operation in the VCD database is very high (more than 10,000), for example:

vcloud=# select count(*) from jobs where operation = 'BEHAVIOR_INVOCATION';

count

--------

385151

(1 row)

- In the logs, you may find the following entries in cell.log:

Successfully verified transfer spooling area: VfsFile[fileObject=file:///opt/vmware/vcloud-director/data/transfer]

Cell startup completed in 1m 39s

java.lang.OutOfMemoryError: Java heap space

Dumping heap to /opt/vmware/vcloud-director/logs/java_pid14129.hprof ...

Dump file is incomplete: No space left on device

log4j:ERROR Failed to flush writer,

java.io.IOException: No space left on device

at java.base/java.io.FileOutputStream.writeBytes(Native Method)

Cause:

The root cause is that VCD runs into a Java ‘OutOfMemoryError’ and writes memory heap dumps (.hprof files) to its logs directory. These dumps exhaust the available storage space, which ultimately crashes the VCD service.
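To confirm that a cell matches this pattern before applying the workaround, it can help to look at the heap dumps in the logs directory and at how full its filesystem is. The following is a minimal sketch, assuming bash with standard `find`, `du`, `df`, and `awk` available on the cell; the function names `list_heap_dumps` and `fs_usage_percent` are hypothetical helpers, not VMware tooling.

```shell
#!/usr/bin/env bash
# Hypothetical diagnostic helpers (not VMware tooling) for the pattern above:
# heap dumps accumulating in the VCD logs directory and filling its filesystem.

# List the .hprof heap dumps directly inside the directory (default: the VCD
# logs directory), one per line, prefixed by their size in KiB.
list_heap_dumps() {
  local dir="${1:-/opt/vmware/vcloud-director/logs}"
  find "$dir" -maxdepth 1 -type f -name '*.hprof' -exec du -k {} +
}

# Print how full the filesystem holding the directory is, as a percentage
# without the % sign, using the portable -P output format of df.
fs_usage_percent() {
  local dir="${1:-/opt/vmware/vcloud-director/logs}"
  df -P "$dir" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}
```

Called without an argument, both functions inspect /opt/vmware/vcloud-director/logs. A large .hprof file together with a usage value at or near 100 is consistent with the “No space left on device” errors shown in cell.log.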

Resolution:

The good news is that VMware has identified this as a bug in VCD and plans to fix it in an upcoming VCD update release. Until that update is available, VMware's team has suggested the following workaround if you encounter this issue:

- SSH into each VCD cell.

- On each cell, check the “/opt/vmware/vcloud-director/logs” directory for Java heap dump files (.hprof).

cd /opt/vmware/vcloud-director/logs

- Remove the files with the “.hprof” extension.

[ /opt/vmware/vcloud-director/logs ]# rm java_xxxxx.hprof

- Connect to the VCD database:

sudo -i -u postgres psql vcloud

- Delete the ‘BEHAVIOR_INVOCATION’ records from the ‘jobs’ table:

vcloud=# delete from jobs where operation = 'BEHAVIOR_INVOCATION';

- Restart the VCD service on each cell, one cell at a time:

service vmware-vcd restart

By following these steps, you can mitigate the issue and keep the VCD service running until VMware releases the official fix.
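The heap dump cleanup from the first three steps can also be scripted on each cell. This is a minimal sketch, assuming bash and a `find` that supports `-maxdepth` and `-delete` (GNU and BSD find both do); `remove_heap_dumps` is a hypothetical helper name. It only covers the .hprof files; the database cleanup and the serial service restarts are still performed as described in the steps.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not VMware tooling): delete the .hprof heap dumps directly
# inside the directory (default: the VCD logs directory) and print how many
# files were removed. Other log files such as cell.log are left untouched.
remove_heap_dumps() {
  local dir="${1:-/opt/vmware/vcloud-director/logs}"
  local count
  # Count the dumps first so the caller can see how many were removed.
  count="$(find "$dir" -maxdepth 1 -type f -name '*.hprof' | wc -l)"
  find "$dir" -maxdepth 1 -type f -name '*.hprof' -delete
  # Arithmetic expansion strips any padding that some wc implementations add.
  echo "$((count))"
}
```

Running `remove_heap_dumps` without an argument on a cell targets /opt/vmware/vcloud-director/logs; restricting the search to `-maxdepth 1` and the `*.hprof` pattern keeps the other logs in that directory intact.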